
Saturday, May 30, 2009

Friday, May 15, 2009

Batch 124 - St Theresa College of Engineering

A new batch started today. I thought a discussion on the C language would give them a solid foundation as well as rekindle an interest in programming/coding.

Some of the topics discussed were:

1. The size of int in C, and how it depends on the underlying architecture.

2. The behavior of floating-point values in the float and double types, and why they have such peculiar names.

3. The printf function, far pointers, and video memory (0xB0008000)

4. Dangling pointers and memory leaks

5. Value types / reference types

6. The benefits of 'passing by reference'
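The session itself was about C, but topics 2, 5 and 6 can also be illustrated in C#, the language used elsewhere on this blog. The sketch below uses hypothetical PointStruct/PointClass types to contrast passing a value type (copied), passing a reference type (the reference is copied, the object is shared), and passing with ref (the caller's variable itself). It also shows that float and double hold different approximations of 0.1. One contrast with topic 1: C# fixes int at 4 bytes on every platform, whereas in C its size depends on the compiler and target architecture.

```csharp
using System;

// Hypothetical types with identical fields: one value type, one reference type.
public struct PointStruct { public int X; }
public class PointClass { public int X; }

public static class TypeDemo
{
    // The struct is copied on the way in, so the caller's value is unchanged.
    public static void BumpValue(PointStruct p) { p.X++; }

    // The reference is copied, but both copies point to the same object, so the change is visible.
    public static void BumpReference(PointClass p) { p.X++; }

    // 'ref' passes the caller's variable itself, so even a value type is updated in place.
    public static void BumpByRef(ref PointStruct p) { p.X++; }

    // Classic use of pass-by-reference: swapping two of the caller's variables.
    public static void Swap(ref int a, ref int b) { int t = a; a = b; b = t; }

    public static void Main()
    {
        PointStruct s = new PointStruct { X = 1 };
        PointClass c = new PointClass { X = 1 };
        BumpValue(s);       // s.X is still 1
        BumpReference(c);   // c.X is now 2
        BumpByRef(ref s);   // s.X is now 2
        Console.WriteLine("struct: " + s.X + ", class: " + c.X);

        int x = 1, y = 2;
        Swap(ref x, ref y);
        Console.WriteLine("x = " + x + ", y = " + y);

        // float carries roughly 7 significant decimal digits and double roughly 15-16,
        // so 0.1f widened to double is not the same value as the double literal 0.1.
        Console.WriteLine((double)0.1f == 0.1);

        // Unlike C, C# fixes the size of int at 4 bytes on every platform.
        Console.WriteLine(sizeof(int));
    }
}
```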


Thursday, May 14, 2009

VISUAL STUDIO 2010

Visual Studio 2010, codenamed "Hawaii", is under development. [A CTP version of it is publicly available as a pre-installed Virtual Hard Disk with Windows Server 2008 as the OS.]

The Visual Studio 2010 IDE has been redesigned, which, according to Microsoft, cleans up the UI organization and "reduces clutter and complexity". The new IDE better supports multiple document windows and floating tool windows, while offering better multi-monitor support. The IDE shell has been rewritten in Windows Presentation Foundation (WPF), whereas the internals have been redesigned using the Managed Extensibility Framework (MEF), which offers more extensibility points than previous versions of the IDE for add-ins that modify the IDE's behavior. The new multi-paradigm, ML-variant F# programming language will be part of Visual Studio 2010, as will M, the textual modelling language, and Quadrant, the visual model designer, both of which are part of the Oslo initiative.

Visual Studio 2010 will come with .NET Framework 4.0 and will support developing applications targeting Windows 7. It will support IBM DB2 and Oracle databases out of the box, in addition to Microsoft SQL Server. It will have integrated support for developing Microsoft Silverlight applications, including an interactive designer. Visual Studio 2010 will offer several tools to make parallel programming simpler. In addition to the Parallel Extensions for .NET Framework and the Parallel Patterns Library for native code, Visual Studio 2010 includes tools for debugging parallel applications. The new tools let parallel Tasks and their runtime stacks be visualized. Tools for profiling parallel applications can be used to visualize thread wait times and thread migrations across processor cores.

The Visual Studio 2010 code editor now highlights references: whenever a symbol is selected, all other usages of the symbol are highlighted. It also offers a Quick Search feature to incrementally search across all symbols in C++, C# and VB.NET projects. Quick Search supports substring matches and camelCase searches. The Call Hierarchy feature allows the developer to see all the methods that are called from the current method as well as the methods that call it. IntelliSense in Visual Studio supports a consume-first mode, which the developer can opt into. In this mode, IntelliSense will not auto-complete identifiers; this allows the developer to use undefined identifiers (like variable or method names) and define them later. Visual Studio 2010 can also help here by defining them automatically, if it can infer their types from usage.

Visual Studio Team System 2010, codenamed Rosario, is being positioned for application lifecycle management. It will include new modelling tools, including the Architecture Explorer, which graphically displays projects and classes and the relationships between them. It supports UML activity, component, (logical) class, sequence, and use case diagrams. Visual Studio Team System 2010 also includes Test Impact Analysis, which provides hints on which test cases are impacted by modifications to the source code, without actually running the test cases. This speeds up testing by avoiding unneeded test runs.

Visual Studio Team System 2010 also includes a Historical Debugger. Unlike the current debugger, which records only the currently active stack, the historical debugger records all events such as prior function calls, method parameters, events, exceptions, etc. This allows code execution to be rewound in case a breakpoint wasn't set where the error occurred. The historical debugger will cause the application to run slower than the current debugger, and will use more memory, as additional data needs to be recorded. Microsoft allows configuration of how much data should be recorded, in effect allowing developers to balance speed of execution and resource usage. The Lab Management component of Visual Studio Team System 2010 uses virtualization to create a similar execution environment for testers and developers. The virtual machines are tagged with checkpoints, which can later be investigated for issues as well as used to reproduce them. Visual Studio Team System 2010 also includes the capability to record test runs, capturing the specific state of the operating environment as well as the precise steps used to run the test. These steps can then be played back to reproduce issues.

- from Wikipedia


Batch 9212 - Session 1 & 2

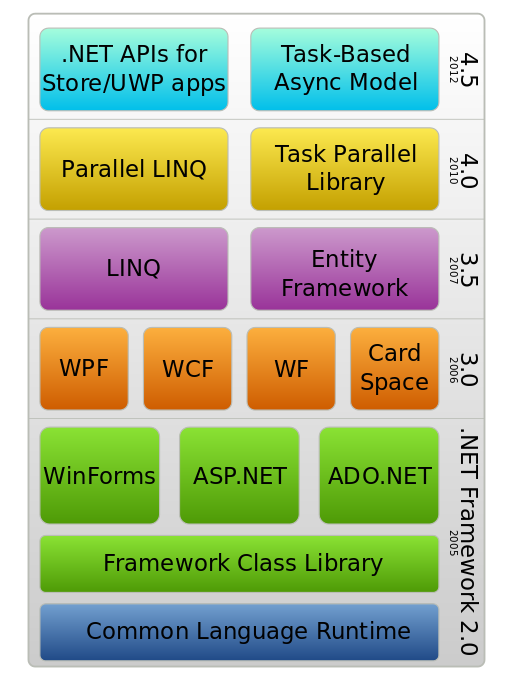

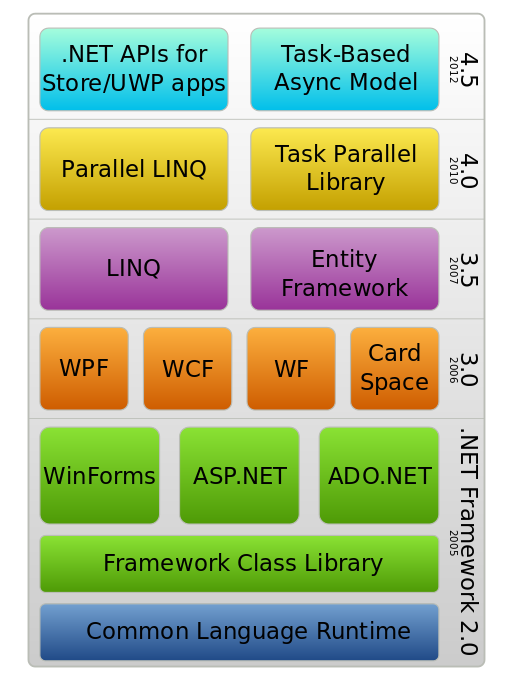

Introduction to .NET Framework and CLR

.NET is an enterprise application development platform.

It comprises:

1. LANGUAGES

- C#, VB.NET, F#, Axum, VC.NET, J#, COBOL.NET, etc. [more than 30 languages]

- All of the above languages adhere to the Common Language Specification (CLS)

- The compilers are modified to emit Intermediate Language [MSIL] code instead of native code

- The languages retain their flavours.

2. DEVELOPMENT TOOLS - VS.NET is an all-in-one IDE in which we can

- CREATE DATABASE

- DEVELOP/CODE

- DEBUG

- TEST

- SETUP & DEPLOY

- and MAINTAIN THE SOFTWARE

3. COMMON LANGUAGE RUNTIME - A layer between a .NET program and the operating system that provides services through the following components:

- JIT Compiler - converts IL code to native code

- Dynamic Class Loader - loads the classes required by a program at runtime

- Garbage Collector - reclaims the memory of objects that are no longer referenced by the program

- Type Verifier - verifies whether assignments and references are type safe

4. APIs / Libraries

- WinForms

- Web Framework [ASP.NET]

- Networking

- Security

- WPF

- WCF

- WorkFlow

- ...

The principal design features include:

1. Language Independence

2. Base Class Library

3. Security and Stability

4. Platform Independence [?]

5. Open Standards
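A minimal C# sketch of two of the points above, assuming a current .NET SDK: C# keywords map onto Common Type System types that all .NET languages share (the basis of language independence), and the runtime rejects type-unsafe casts at run time. Note that the cast check shown here is the CLR's runtime type checking, which is related to, but not the same as, the IL verification the type verifier performs; ClrDemo and its methods are hypothetical names.

```csharp
using System;

public static class ClrDemo
{
    // C# keywords are aliases for Common Type System types shared by every .NET language:
    // 'int' in C# and 'Integer' in VB.NET both denote System.Int32.
    public static bool IntIsSystemInt32()
    {
        return typeof(int) == typeof(Int32) && typeof(int).FullName == "System.Int32";
    }

    // The runtime checks casts: unboxing a string as an int throws
    // InvalidCastException instead of reinterpreting the underlying memory.
    public static bool InvalidCastIsRejected()
    {
        object boxed = "not a number";
        try
        {
            int n = (int)boxed;
            Console.WriteLine(n);   // never reached
            return false;
        }
        catch (InvalidCastException)
        {
            return true;
        }
    }

    public static void Main()
    {
        Console.WriteLine(IntIsSystemInt32());
        Console.WriteLine(InvalidCastIsRejected());
        // Environment.Version reports the version of the runtime executing this code.
        Console.WriteLine(Environment.Version);
    }
}
```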


Batch 9212 - Vignan Institute of Technology

Today I started .NET training for a batch of students from Vignan Institute of Technology.

I would love to share the sessions with everyone.

The course content [C# module] is as follows:

1 Introduction to .NET Framework

2 Common Language Runtime / Common Language Specification

3 Language Fundamentals Part 1 – semantics / data types / operators

4 Language Fundamentals Part 2 - control flow / arrays / structs

5 Object Oriented Concepts Part 1 – class, object, properties, constructors, inheritance

6 Object Oriented Concepts Part 2 – polymorphism [early/late binding], interfaces

7 Object Oriented Concepts Part 3 – Operator Overloading

8 Utility Classes – streams, string, math, collections

9 Advanced Language Concepts Part 1 – exception handling, delegates & events

10 Advanced Language Concepts Part 2 – multithreading

11 Advanced Language Concepts Part 3 – generics, serialization

12 Windows Applications Part 1

13 Windows Applications Part 2

14 WPF Part 1

15 WPF Part 2

16 ADO.NET Part 1 – basics

17 ADO.NET Part 2 – disconnected data model

18 ADO Sample Application 1

19 ADO Sample Application 2

20 LINQ Part 1 – introduction to LINQ

21 LINQ Part 2 – LINQ to SQL/XML/DataSet

22 Networking

23 Networking Sample Application

24 Security

25 Assemblies & GAC, Reflection

26 CLR Concepts – GC, etc

27 Parallel Computing - Multiple Cores

28 Unit Testing / Setup and Deployment

29 Sample Application

30 Sample Application

The course content [ASP.NET module] is as follows:

1 Introduction to Web

2 HTML

3 JavaScript

4 HTML DOM

5 Web Server Concepts

6 ASP.NET Fundamentals

7 ASP.NET Comparison to JSP/ASP/PHP

8 ASP.NET State Management

9 ASP.NET Navigation

10 ASP.NET Data Binding

11 ASP.NET Caching

12 ASP.NET Controls Part 1 – standard, login

13 ASP.NET Controls Part 2 – navigation, Web Parts

14 ASP.NET Controls Part 3 - validation controls

15 ASP.NET Controls Part 4 - user / custom controls

16 ASP.NET Master Pages

17 ASP.NET Themes / Skins

18 ASP.NET User Profiles

19 ASP.NET Configuration

20 XML

21 AJAX

22 Silverlight

23 Web Services / WSE

24 Live Services

25 WCF Part 1

26 WCF Part 2

27 WF

28 CardSpace

29 Sample Application Part 1

30 Sample Application Part 2


Saturday, May 2, 2009

The C# Programming Language Version 4.0

Visual Studio 2010 and the .NET Framework 4.0 will soon be in beta and there are some excellent new features that we can all get excited about with this new release. Along with Visual Studio 2010 and the .NET Framework 4.0 we will see version 4.0 of the C# programming language. In this blog post I thought I'd look back over where we have been with the C# programming language and look to where Anders Hejlsberg and the C# team are taking us next.

In 1998 the C# project began with the goal of creating a simple, modern, object-oriented, and type-safe programming language for what has since become known as the .NET platform. Microsoft launched the .NET platform and the C# programming language in the summer of 2000 and since then C# has become one of the most popular programming languages in use today.

With version 2.0 the language evolved to provide support for generics, anonymous methods, iterators, partial types, and nullable types.

When designing version 3.0 of the language, the emphasis was on enabling LINQ (Language Integrated Query), which required the addition of:

•Implicitly Typed Local Variables.

•Extension Methods.

•Lambda Expressions.

•Object and Collection Initializers.

•Anonymous Types.

•Implicitly Typed Arrays.

•Query Expressions and Expression Trees.
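The following sketch touches each C# 3.0 feature in the list in a few lines. The Person type, the IsCapitalized extension method, and the sample data are hypothetical, chosen only so the query has something to filter.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Linq.Expressions;

public static class StringExtensions
{
    // Extension method: called as if it were an instance method of string.
    public static bool IsCapitalized(this string s)
    {
        return !string.IsNullOrEmpty(s) && char.IsUpper(s[0]);
    }
}

// Hypothetical sample type for the query below.
public class Person
{
    public string Name { get; set; }
    public int Age { get; set; }
}

public static class CSharp3Demo
{
    public static List<string> CapitalizedAdults()
    {
        // Implicitly typed local plus collection and object initializers.
        var people = new List<Person>
        {
            new Person { Name = "Alice", Age = 30 },
            new Person { Name = "bob", Age = 25 },
            new Person { Name = "Carol", Age = 42 },
            new Person { Name = "Dave", Age = 15 }
        };

        // Query expression projecting into an anonymous type.
        var query = from p in people
                    where p.Age >= 18 && p.Name.IsCapitalized()
                    orderby p.Name
                    select new { p.Name, p.Age };

        // Lambda expression passed to the Select extension method.
        return query.Select(x => x.Name).ToList();
    }

    public static int SquareViaExpressionTree(int n)
    {
        // The lambda is captured as data (an expression tree), then compiled into a delegate.
        Expression<Func<int, int>> square = x => x * x;
        return square.Compile()(n);
    }

    public static void Main()
    {
        var numbers = new[] { 1, 2, 3 };   // implicitly typed array (int[])
        Console.WriteLine(string.Join(", ", CapitalizedAdults()));
        Console.WriteLine(SquareViaExpressionTree(numbers.Length));
    }
}
```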

In the past, programming languages were designed with a particular paradigm in mind, and so we have languages that were, for example, designed to be either object-oriented or functional. Today, however, languages are being designed with several paradigms in mind. In version 3.0, the C# programming language acquired several capabilities normally associated with functional programming in order to enable Language Integrated Query (LINQ).

In version 4.0 the C# programming language continues to evolve, although this time the C# team were inspired by dynamic languages such as Perl, Python, and Ruby. The reality is that there are advantages and disadvantages to both dynamically and statically typed languages.

Another paradigm that is driving language design and innovation is concurrency and that is a paradigm that has certainly influenced the development of Visual Studio 2010 and the .NET Framework 4.0. See the MSDN Parallel Computing development center for more information about those changes. I'll also be blogging more about Visual Studio 2010 and the .NET Framework 4.0 in the next few weeks.

Essentially, the C# 4.0 language innovations include:

1. Dynamically Typed Objects.

2. Optional and Named Parameters.

3. Improved COM Interoperability.

4. Safe Co- and Contra-variance.

5. Concurrency.

Enough talking already; let's look at some code written in C# 4.0 using these language innovations...

1. Dynamically Typed Objects:

In C# today you might have code such as the following that gets an instance of a statically typed .NET class and then calls the Add method on that class to get the sum of two integers:

```csharp
Calculator calc = GetCalculator();
int sum = calc.Add(10, 20);
```

Our code gets all the more interesting if the Calculator class is not statically typed but is instead written in COM, Ruby, Python, or even JavaScript. Even if we knew that the calculator is a .NET object, without knowing its specific type we would have to use reflection to discover attributes of the type at runtime and then dynamically invoke the Add method:

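```csharp
// Requires: using System; using System.Reflection;
object calc = GetCalculator();
Type type = calc.GetType();
// InvokeMember needs the target instance (calc) as well as the argument array.
object result = type.InvokeMember("Add",
    BindingFlags.InvokeMethod, null,
    calc, new object[] { 10, 20 });
int sum = Convert.ToInt32(result);
```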

If the Calculator class were written in JavaScript, our code would look somewhat like the following:

```csharp
ScriptObject calc = GetCalculator();
object result = calc.InvokeMember("Add", 10, 20);
int sum = Convert.ToInt32(result);
```

With C# 4.0 we would simply write the following code:

```csharp
dynamic calc = GetCalculator();
int result = calc.Add(10, 20);
```

In the above example we are declaring a variable, calc, whose static type is dynamic. Yes, you read that correctly, we've statically typed our object to be dynamic. We'll then be using dynamic method invocation to call the Add method and then dynamic conversion to convert the result of the dynamic invocation to a statically typed integer.

You're still encouraged to use static typing wherever possible because of the benefits that statically typed languages afford us. With C# 4.0, however, it should be less painful on those occasions when you have to interact with dynamically typed objects.
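A self-contained sketch of the dynamic call, assuming the C# runtime binder (Microsoft.CSharp) is available, as it is by default in current .NET SDK projects. The Calculator class is a hypothetical stand-in for whatever GetCalculator() returns; the second method makes the same call against an ExpandoObject, whose members are attached at runtime.

```csharp
using System;
using System.Dynamic;

// Hypothetical stand-in for the object returned by GetCalculator().
public class Calculator
{
    public int Add(int x, int y) { return x + y; }
}

public static class DynamicDemo
{
    // The caller only sees 'object', mimicking a type not known at compile time.
    public static object GetCalculator() { return new Calculator(); }

    public static int AddDynamically(object target, int a, int b)
    {
        dynamic calc = target;   // static type is 'dynamic'
        return calc.Add(a, b);   // member lookup happens at runtime; result converted to int
    }

    public static int AddViaExpando(int a, int b)
    {
        // ExpandoObject lets members be attached at runtime; here 'Add' is a delegate-valued member.
        dynamic calc = new ExpandoObject();
        calc.Add = new Func<int, int, int>((x, y) => x + y);
        return calc.Add(a, b);
    }

    public static void Main()
    {
        Console.WriteLine(AddDynamically(GetCalculator(), 10, 20));
        Console.WriteLine(AddViaExpando(10, 20));
    }
}
```

If the target has no Add member, the call compiles anyway and fails only at runtime with a binder exception, which is exactly the trade-off the paragraph above warns about.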

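2. Optional and Named Parameters:

Example (i): parameters can now declare default values, so callers may omit trailing arguments or name only the ones they want to set.

```csharp
// Requires: using System.IO; using System.Text;
public StreamReader OpenTextFile(
    string path,
    Encoding encoding = null,
    bool detectEncoding = false,
    int bufferSize = 1024)
{
    // Fall back to UTF-8 when no encoding is supplied.
    return new StreamReader(path, encoding ?? Encoding.UTF8, detectEncoding, bufferSize);
}
```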
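Because OpenTextFile needs a real file, the following runnable sketch uses a hypothetical Describe method with the same parameter-list shape (encoding simplified to a string) that simply echoes what it receives, making it easy to see which defaults apply when arguments are omitted or passed by name.

```csharp
using System;

public static class OptionalDemo
{
    // Hypothetical method mirroring OpenTextFile's optional parameters.
    public static string Describe(string path, string encoding = "utf-8",
                                  bool detectEncoding = false, int bufferSize = 1024)
    {
        return path + "|" + encoding + "|" + detectEncoding + "|" + bufferSize;
    }

    public static void Main()
    {
        Console.WriteLine(Describe("a.txt"));                          // all defaults
        Console.WriteLine(Describe("a.txt", "ascii"));                 // positional override
        Console.WriteLine(Describe("a.txt", bufferSize: 4096));        // named: skips the middle parameters
        Console.WriteLine(Describe(bufferSize: 8, path: "b.txt"));     // named arguments in any order
    }
}
```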

Example (ii):

If you have ever written any code that performs some degree of COM interoperability you have probably seen code such as the following.

```csharp
object filename = "test.docx";
object missing = System.Reflection.Missing.Value;
doc.SaveAs(ref filename,
    ref missing, ref missing, ref missing,
    ref missing, ref missing, ref missing,
    ref missing, ref missing, ref missing,
    ref missing, ref missing, ref missing,
    ref missing, ref missing, ref missing);
```

With optional and named parameters the C# 4.0 language provides significant improvements in COM interoperability and so the above code can now be refactored such that the call is merely:

```csharp
doc.SaveAs("test.docx");
```

3. Improved COM Interoperability:

With previous versions of the technologies it was necessary to also ship a Primary Interop Assembly (PIA) along with your managed application. This is not necessary when using C# 4.0 because the compiler will instead inject the interop types directly into the assemblies of your managed application and will only inject those types you're using and not all of the types found within the PIA.

4. Safe Co- and Contra-variance:

```csharp
string[] names = new string[] {
    "Anders Hejlsberg",
    "Mads Torgersen",
    "Scott Wiltamuth",
    "Peter Golde" };
Write(names);
```

Since version 1.0 an array in the .NET Framework has been co-variant meaning that an array of strings, for example, can be passed to a method that expects an array of objects. As such the above array can be passed to the following Write method which expects an array of objects.

```csharp
private void Write(object[] objects)
{
}
```

Unfortunately, arrays in .NET are not safely co-variant, as the following code shows. Assuming that the objects variable refers to an array of strings, the following will succeed:

```csharp
objects[0] = "Hello World";
```

However, if an attempt is made to assign an integer to the array of strings, an ArrayTypeMismatchException is thrown at runtime:

```csharp
objects[0] = 1024;
```
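The runtime check described above can be observed directly. The sketch below (ArrayCovarianceDemo is a hypothetical name) views a string[] as an object[], stores a string through it, and then tries to store an int.

```csharp
using System;

public static class ArrayCovarianceDemo
{
    // Returns true if storing an int through the covariant object[] view fails at runtime.
    public static bool StoringIntThrows()
    {
        string[] names = { "Anders Hejlsberg", "Mads Torgersen" };
        object[] objects = names;        // allowed: arrays are co-variant
        objects[0] = "Hello World";      // fine: the element really is a string
        try
        {
            objects[0] = 1024;           // compiles, but the runtime store check fails
            return false;
        }
        catch (ArrayTypeMismatchException)
        {
            return true;
        }
    }

    public static void Main()
    {
        Console.WriteLine(StoringIntThrows());
    }
}
```

The compiler accepts both assignments; only the runtime element-type check catches the bad one, which is why this kind of co-variance is called unsafe.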

In both C# 2.0 and C# 3.0, generics are invariant, so the following code would result in a compiler error:

```csharp
List<string> names = new List<string>();
Write(names);
```

Where the Write method is defined as:

```csharp
public void Write(IEnumerable<object> objects) { }
```

Generics in C# 4.0 now support safe co-variance and contra-variance through the use of the in and out contextual keywords. Let's take a look at how this changes the definition of the IEnumerable<T> interface:

```csharp
public interface IEnumerable<out T>
{
    IEnumerator<T> GetEnumerator();
}

public interface IEnumerator<out T>
{
    T Current { get; }
    bool MoveNext();
}
```

You'll notice that the type parameter T of the IEnumerable<T> interface has been prefixed with the out contextual keyword. Because the interface is read-only (it offers no way to insert new elements into the sequence), it is safe to treat something more derived as something less derived. With the out contextual keyword we are contractually affirming that IEnumerable<T> uses T only in output positions, so the following is now legal:

```csharp
IEnumerable<string> names = GetTeamNames();
IEnumerable<object> objects = names;
```

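Because the IEnumerable<T> interface is now co-variant in T, an IEnumerable<string> can be assigned to an IEnumerable<object>.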

Using the in contextual keyword we can achieve safe contra-variance, that is treating something less derived as something more derived.

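```csharp
public interface IComparer<in T>
{
    int Compare(T x, T y);
}
```

Given that IComparer<T> uses T only in input positions, a comparer that can compare any two objects can safely be used to compare two strings:

```csharp
IComparer<object> objectComparer = GetComparer();
IComparer<string> stringComparer = objectComparer;
```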

Although the current CTP build of Visual Studio 2010 and the .NET Framework 4.0 has limited support for the variance improvements in C# 4.0, the forthcoming beta will allow you to use the new in and out contextual keywords in types such as IComparer<T>.
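With the built-in System.Collections.Generic interfaces, which are declared as IEnumerable<out T> and IComparer<in T> from .NET 4.0 onward, both conversions can be exercised in a short sketch. VarianceDemo and LengthComparer are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

public static class VarianceDemo
{
    // Accepts any sequence of objects; thanks to IEnumerable<out T>, a List<string> qualifies.
    public static int CountObjects(IEnumerable<object> objects)
    {
        int count = 0;
        foreach (object o in objects) count++;
        return count;
    }

    // Hypothetical comparer written once for objects, ordering them by ToString() length.
    private class LengthComparer : IComparer<object>
    {
        public int Compare(object x, object y)
        {
            return x.ToString().Length.CompareTo(y.ToString().Length);
        }
    }

    public static List<string> SortByLength(List<string> names)
    {
        IComparer<object> objectComparer = new LengthComparer();
        IComparer<string> stringComparer = objectComparer;   // legal because of IComparer<in T>
        List<string> copy = new List<string>(names);
        copy.Sort(stringComparer);
        return copy;
    }

    public static void Main()
    {
        List<string> names = new List<string> { "Mads Torgersen", "Peter Golde", "Anders Hejlsberg" };
        Console.WriteLine(CountObjects(names));
        Console.WriteLine(string.Join(", ", SortByLength(names)));
    }
}
```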

5. Concurrency:

The Task Parallel Library, which has been around for over a year, has been vastly improved.
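As a minimal illustration of the Task Parallel Library (System.Threading.Tasks in .NET 4.0 and later), the sketch below uses Parallel.For to fill an array in parallel and Task.Factory.StartNew to run two independent computations. ConcurrencyDemo and its methods are hypothetical.

```csharp
using System;
using System.Threading.Tasks;

public static class ConcurrencyDemo
{
    // Each iteration writes only to its own slot, so the parallel loop needs no locking.
    public static long SumOfSquares(int n)
    {
        long[] squares = new long[n];
        Parallel.For(0, n, i => { squares[i] = (long)i * i; });
        long total = 0;
        foreach (long s in squares) total += s;
        return total;
    }

    // Two independent halves of the range run as separate tasks; Result waits for each one.
    public static long SumTwoRanges(int n)
    {
        Task<long> low = Task.Factory.StartNew(() => SumRange(0, n / 2));
        Task<long> high = Task.Factory.StartNew(() => SumRange(n / 2, n));
        return low.Result + high.Result;
    }

    private static long SumRange(int from, int to)
    {
        long sum = 0;
        for (int i = from; i < to; i++) sum += i;
        return sum;
    }

    public static void Main()
    {
        Console.WriteLine(SumOfSquares(10));
        Console.WriteLine(SumTwoRanges(100));
    }
}
```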

- from Intel

Google's BigTable

BigTable is a compressed, high performance, and proprietary database system built on Google File System (GFS), Chubby Lock Service, and a few other Google programs; it is currently not distributed or used outside of Google, although Google offers access to it as part of their Google App Engine. It began in 2004 and is now used by a number of Google applications, such as MapReduce, which is often used for generating and modifying data stored in BigTable, Google Reader, Google Maps, Google Book Search, "My Search History", Google Earth, Blogger.com, Google Code hosting, Orkut, and YouTube. Google's reasons for developing its own database include scalability, and better control of performance characteristics.

BigTable is a fast and extremely large-scale DBMS. However, rather than following the typical convention of a fixed number of columns, it is described by its authors as "a sparse, distributed multi-dimensional sorted map", sharing characteristics of both row-oriented and column-oriented databases. BigTable is designed to scale into the petabyte range across "hundreds or thousands of machines, and to make it easy to add more machines [to] the system and automatically start taking advantage of those resources without any reconfiguration".
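To make "sparse, distributed multi-dimensional sorted map" concrete, here is a single-machine C# sketch under stated assumptions: cells are keyed by (row, column, timestamp) as in the BigTable paper, rows are kept in sorted order, and only cells that were written consume space. The SparseTable class is hypothetical and ignores distribution, tablets, and compression entirely.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical single-machine model: row -> column -> (timestamp -> value).
public class SparseTable
{
    private readonly SortedDictionary<string, Dictionary<string, SortedDictionary<long, string>>> rows =
        new SortedDictionary<string, Dictionary<string, SortedDictionary<long, string>>>(StringComparer.Ordinal);

    public void Put(string row, string column, long timestamp, string value)
    {
        Dictionary<string, SortedDictionary<long, string>> columns;
        if (!rows.TryGetValue(row, out columns))
        {
            columns = new Dictionary<string, SortedDictionary<long, string>>();
            rows[row] = columns;
        }
        SortedDictionary<long, string> versions;
        if (!columns.TryGetValue(column, out versions))
        {
            versions = new SortedDictionary<long, string>();
            columns[column] = versions;
        }
        versions[timestamp] = value;   // a new timestamp adds a version; older versions are kept
    }

    // Returns the newest value written at or before 'asOf', or null if there is none.
    public string Get(string row, string column, long asOf)
    {
        Dictionary<string, SortedDictionary<long, string>> columns;
        SortedDictionary<long, string> versions;
        if (!rows.TryGetValue(row, out columns) || !columns.TryGetValue(column, out versions))
            return null;
        string result = null;
        foreach (KeyValuePair<long, string> version in versions)   // ascending timestamps
        {
            if (version.Key > asOf) break;
            result = version.Value;
        }
        return result;
    }

    // Row keys in sorted (ordinal) order, i.e. the order a range scan would visit them.
    public IEnumerable<string> RowKeys { get { return rows.Keys; } }

    public static void Main()
    {
        SparseTable table = new SparseTable();
        table.Put("com.example.www", "contents:", 3, "<html>v3");
        table.Put("com.example.www", "contents:", 5, "<html>v5");
        table.Put("com.apple.www", "anchor:home", 1, "Home");
        Console.WriteLine(table.Get("com.example.www", "contents:", 4));
        Console.WriteLine(string.Join(", ", table.RowKeys));
    }
}
```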

Each table has multiple dimensions (one of which is a field for time, allowing versioning). Tables are optimized for GFS by being split into multiple tablets - segments of the table as split along a row chosen such that the tablet will be ~200 megabytes in size. When sizes threaten to grow beyond a specified limit, the tablets are compressed using the secret algorithms BMDiff and Zippy, which are described as less space-optimal than LZW but more efficient in terms of computing time. The locations in the GFS of tablets are recorded as database entries in multiple special tablets, which are called "META1" tablets. META1 tablets are found by querying the single "META0" tablet, which typically has a machine to itself since it is often queried by clients as to the location of the "META1" tablet which itself has the answer to the question of where the actual data is located. Like GFS's master server, the META0 server is not generally a bottleneck since the processor time and bandwidth necessary to discover and transmit META1 locations is minimal and clients aggressively cache locations to minimize queries.
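The tablet-location scheme can be pictured as a sorted index searched by row key. The sketch below assumes, as the BigTable paper describes for its metadata, that each tablet is recorded under the last row key it contains, and that keys compare ordinally (byte-wise); TabletIndex and the server names are hypothetical. A real lookup would perform this search twice, first in META0 to find the right META1 tablet and then in that META1 tablet to find the data tablet, with clients caching the results.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical index: each tablet covers a contiguous range of row keys and is
// recorded under the last row key it contains, kept in ascending ordinal order.
public class TabletIndex
{
    private readonly List<string> endKeys = new List<string>();
    private readonly List<string> locations = new List<string>();   // parallel to endKeys

    // Simplification: tablets must be registered in ascending order of end key.
    public void AddTablet(string lastRowKey, string location)
    {
        if (endKeys.Count > 0 && string.CompareOrdinal(lastRowKey, endKeys[endKeys.Count - 1]) <= 0)
            throw new ArgumentException("Tablets must be added in ascending key order.");
        endKeys.Add(lastRowKey);
        locations.Add(location);
    }

    // The tablet holding rowKey is the first one whose end key is >= rowKey.
    public string Locate(string rowKey)
    {
        int index = endKeys.BinarySearch(rowKey, StringComparer.Ordinal);
        if (index < 0) index = ~index;   // no exact match: take the next larger end key
        return index < endKeys.Count ? locations[index] : null;   // null: past the last tablet
    }

    public static void Main()
    {
        TabletIndex index = new TabletIndex();
        index.AddTablet("fig", "tablet-server-1");
        index.AddTablet("mango", "tablet-server-2");
        index.AddTablet("plum", "tablet-server-3");
        Console.WriteLine(index.Locate("kiwi"));
    }
}
```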

- from Wikipedia

FaceBook's Haystack for photos

In a blog post today, Facebook shares some secrets of its photo infrastructure, which is based on its core innovation, Haystack. First let me give you some fun facts about Facebook Photos, which will help you understand why what they’ve done is so impressive.

•Facebook users have uploaded more than 15 billion photos to date, making it the biggest photo-sharing site on the web.

•For each uploaded photo, Facebook generates and stores four images of different sizes, which translates into a total of 60 billion images and 1.5 petabytes of storage.

•Facebook adds 220 million new photos per week, or roughly 25 terabytes of additional storage.

•At peak, 550,000 images are served per second.

Growth at such speeds made it almost impossible for Facebook to solve the scaling problem by throwing more hardware at it; they needed a more creative solution. Enter Doug Beaver, Peter Vajgel and Jason Sobel, three Facebook engineers who came up with the idea of the Haystack photo infrastructure.

“What we needed was something that was fast and had the ability to back up data really fast,” said Beaver in an interview earlier today. The concept they came up with was pretty simple and yet very powerful. “Think of Haystack as a service that runs on another file system,” explained Beaver. It is a system that does only one thing, photos, and does it very well. From the Facebook blog post:

The new photo infrastructure merges the photo serving tier and storage tier into one physical tier. It implements a HTTP based photo server, which stores photos in a generic object store called Haystack. The main requirement for the new tier was to eliminate any unnecessary metadata overhead for photo read operations, so that each read I/O operation was only reading actual photo data (instead of filesystem metadata).

The Haystack infrastructure consists of commodity servers. Again, from the post:

Haystack is deployed on top of commodity storage blades. The typical hardware configuration of a 2U storage blade is: 2 x quad-core CPUs, 16GB – 32GB memory, hardware raid controller with 256MB – 512MB of NVRAM cache and 12+ 1TB SATA drives

Typically, when you upload photos to a photo-sharing site, each image is stored as a file and therefore has its own metadata, an overhead that gets magnified many times over when there are millions of files. This imposes severe limitations. As a result, most sites end up using content delivery networks to serve photos, a very costly proposition. By comparison, the Haystack object store sits on top of this storage. Each photo is akin to a needle and has a certain amount of information (its identity and location) associated with it. (Finding the photo is akin to finding a needle in the haystack, hence the name of the system.)

That information is in turn used to build an index file. A copy of the index is written into the memory of the “system,” making it very easy to find and in turn serve files at lightning-fast speeds. This rather simple-sounding process means the system needs just a third of the I/O operations typically required, making it possible to use just one-third of the hardware resources, all of which translates into tremendous cost savings for Facebook, especially considering how fast they’re growing.
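The core of the idea, many photos packed into one large file plus an in-memory index of each photo's offset and size, can be sketched in a few lines of C#. NeedleStore is hypothetical and uses a MemoryStream in place of a real on-disk store; it omits everything a production store needs, such as per-needle headers, integrity checks, deletes, and persisting the index file.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical sketch: photos ("needles") are appended to one large stream, and an
// in-memory index maps each photo id to its offset and size, so a read is one seek plus one read.
public class NeedleStore
{
    private struct NeedleLocation
    {
        public long Offset;
        public int Size;
    }

    private readonly Stream store;   // stands in for the large haystack file
    private readonly Dictionary<long, NeedleLocation> index = new Dictionary<long, NeedleLocation>();

    public NeedleStore(Stream store) { this.store = store; }

    public void Append(long photoId, byte[] data)
    {
        long offset = store.Seek(0, SeekOrigin.End);   // always append at the end
        store.Write(data, 0, data.Length);
        index[photoId] = new NeedleLocation { Offset = offset, Size = data.Length };
    }

    // Returns the photo bytes, or null if the id is unknown; no filesystem metadata is consulted.
    public byte[] Read(long photoId)
    {
        NeedleLocation loc;
        if (!index.TryGetValue(photoId, out loc)) return null;
        byte[] buffer = new byte[loc.Size];
        store.Seek(loc.Offset, SeekOrigin.Begin);
        int read = 0;
        while (read < loc.Size)   // Stream.Read may return fewer bytes than requested
        {
            int n = store.Read(buffer, read, loc.Size - read);
            if (n == 0) throw new EndOfStreamException();
            read += n;
        }
        return buffer;
    }

    public static void Main()
    {
        NeedleStore haystack = new NeedleStore(new MemoryStream());
        haystack.Append(42, new byte[] { 0xFF, 0xD8, 0xFF });   // placeholder photo bytes
        haystack.Append(43, new byte[] { 0x89, 0x50 });
        Console.WriteLine(haystack.Read(43).Length);
    }
}
```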

Next time I upload a photo, I will be sure to remember that.

- from Gigaom
